16 Agents, 5,000 Tokens Per Second, 13 Seconds to a Complete App

I've been fascinated by Groq for a while now. They build specialized hardware — LPUs — that output tokens at speeds you can't really compare to traditional GPU clusters. On top of that, there are the smaller open-source models: Scout at 17 billion parameters, Maverick also at 17B. No GPT-4, no Claude. Lean, fast, focused.

The question that interested me: How fast can you actually build a complete app if you don't have one large model do everything sequentially — but instead have many small models work in parallel? An agentic network where each agent has a clearly defined task. One writes the types, one the routes, one the API client, one the socket handling. All at the same time.

I built an orchestrator in Rust and iterated through eight versions. The result: 16 agents write a complete chat app in 13 seconds — 34 files, over 2,200 lines of code, with a combined throughput of over 5,000 tokens per second.

What makes Groq different

Groq hardware isn't just a faster GPU. LPUs are chips built specifically for inference. The models are smaller than what you know from OpenAI or Anthropic — 17B instead of 70B or 175B parameters. That means less reasoning depth per agent, but massively more speed.

My thinking was: if a single 17B model delivers maybe 80% of the code quality of a large model, can you compensate through specialization? If each agent only has a small, clearly defined task — two or three files with a clear scope — then 17B should be enough.

That was the hypothesis. Whether it works, I had to find out.

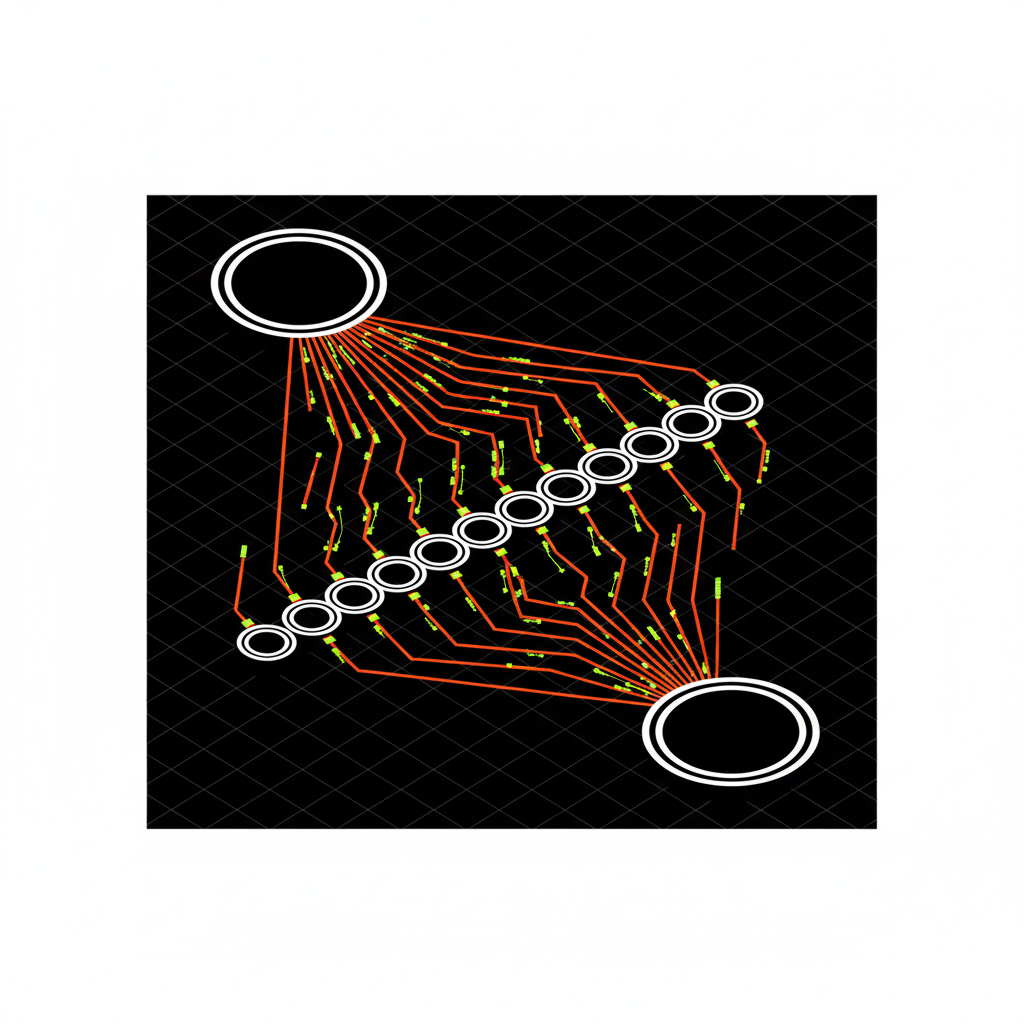

The setup: Three-Pass DAG

The orchestrator breaks a natural-language app description into a planning step, three generation passes, and a final validation step:

graph TD
    SPEC["Spec"] --> PLAN["Plan · ~2s"]
    PLAN --> P0["Types · ~2s"]
    P0 --> P1["8-14 Agents · ~6s"]
    P1 --> P2["Wiring · ~2s"]
    P2 --> VAL["Validate · ~1.5s"]
    VAL --> OUT["34 Files"]
    style P0 fill:#FF3D00,stroke:#fff,stroke-width:2px,color:#fff
    style P1 fill:#FF3D00,stroke:#fff,stroke-width:2px,color:#fff
    style P2 fill:#FF3D00,stroke:#fff,stroke-width:2px,color:#fff
    style PLAN fill:#1A1A1A,stroke:#C8FF00,stroke-width:2px,color:#fff
    style VAL fill:#1A1A1A,stroke:#C8FF00,stroke-width:2px,color:#fff
    style SPEC fill:#1A1A1A,stroke:#fff,stroke-width:2px,color:#fff
    style OUT fill:#1A1A1A,stroke:#fff,stroke-width:2px,color:#fff

Plan: Maverick-17B analyzes the spec and decides how many agents are needed. About two seconds.

Pass 0: A single types agent generates the central shared/types.ts — the file all other agents will reference. Without it, each agent invents its own definitions.

Pass 1: 8 to 14 feature agents run simultaneously. Each gets the types file as context. Six seconds later, all files are done.

After a short wiring pass (pass 2, about two seconds), a validator checks consistency: do imports match, do types fit together, are there duplicate routes. The whole run takes 13.3 seconds.
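The ordering constraint between the passes can be sketched in a few lines of Rust. This is a minimal illustration, not the actual orchestrator: `AgentTask`, `GeneratedFile`, `run_agent`, and `build` are hypothetical names, the LLM call is replaced by a stub, the wiring pass is left out, and plain scoped threads from the standard library stand in for whatever concurrency mechanism the real system uses.

```rust
use std::thread;

/// One unit of work for a feature agent (hypothetical shape).
#[derive(Debug, Clone)]
struct AgentTask {
    name: String,
    files: Vec<String>,
}

/// A generated file: path plus content.
#[derive(Debug, Clone, PartialEq)]
struct GeneratedFile {
    path: String,
    content: String,
}

/// Stand-in for the LLM call. A real orchestrator would send a prompt
/// that contains `shared_types` to the inference API here.
fn run_agent(task: &AgentTask, shared_types: &str) -> Vec<GeneratedFile> {
    task.files
        .iter()
        .map(|path| GeneratedFile {
            path: path.clone(),
            content: format!(
                "// agent: {}\n// types context: {} bytes\n",
                task.name,
                shared_types.len()
            ),
        })
        .collect()
}

/// Pass 0 runs alone, pass 1 fans out, results come back in task order.
fn build(tasks: &[AgentTask]) -> Vec<GeneratedFile> {
    // Pass 0: the types file must exist before any feature agent starts.
    // The content is a placeholder for what the types agent would return.
    let shared_types = String::from("export interface Message { id: string }\n");
    let mut files = vec![GeneratedFile {
        path: "shared/types.ts".to_string(),
        content: shared_types.clone(),
    }];

    // Pass 1: one scoped thread per feature agent, all reading the same types file.
    let results: Vec<Vec<GeneratedFile>> = thread::scope(|s| {
        // Spawn every agent first so they actually run concurrently.
        let handles: Vec<_> = tasks
            .iter()
            .map(|task| {
                let types = &shared_types;
                s.spawn(move || run_agent(task, types))
            })
            .collect();
        handles
            .into_iter()
            .map(|h| h.join().expect("agent thread panicked"))
            .collect()
    });

    files.extend(results.into_iter().flatten());
    files
}
```

Calling `build` with two tasks that own one and two files respectively returns four files, with `shared/types.ts` always first. The point of the structure is that no feature agent can start before the types file exists.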

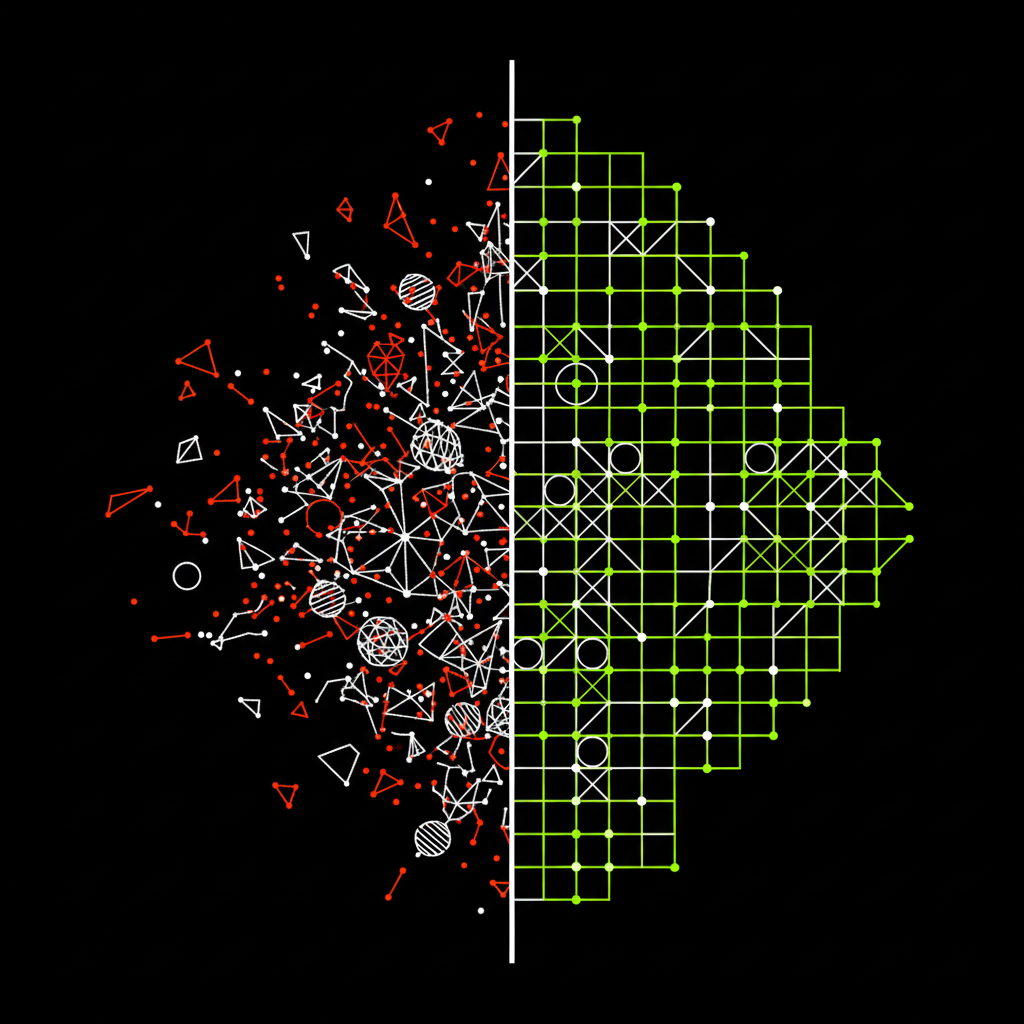

Eight versions until it worked

The path there wasn't linear. Eight versions, each with its own problems.

The first attempts with four fixed agents (v3) produced usable but incomplete code. The responsibilities were too broad — one agent for "frontend" is too much for a 17B model.

From v4 on, the orchestrator dynamically decided how many agents were needed. That helped, but a fundamental problem persisted until v6: the central types file only existed as a prompt description, not as an actual generated file.

graph TD
    V3["v3 · B- · 4 Ag"] --> V4["v4 · B+ · 8 Ag"]
    V4 --> V5["v5 · A- · 12 Ag"]
    V5 --> V6["v6 · A · 19 Ag"]
    V6 --> V7["v7 · A+ · 12 Ag"]
    V7 --> V8["v8 · A+ · 16 Ag"]
    style V3 fill:#1A1A1A,stroke:#fff,stroke-width:2px,color:#fff
    style V4 fill:#1A1A1A,stroke:#fff,stroke-width:2px,color:#fff
    style V5 fill:#1A1A1A,stroke:#C8FF00,stroke-width:2px,color:#fff
    style V6 fill:#1A1A1A,stroke:#C8FF00,stroke-width:2px,color:#fff
    style V7 fill:#FF3D00,stroke:#fff,stroke-width:2px,color:#fff
    style V8 fill:#FF3D00,stroke:#fff,stroke-width:2px,color:#fff

v3–v6: Agent count grew, but code quality fluctuated. Without a real types file, imports never matched.

v7: A dedicated types agent in pass 0 creates the file as real output. Consistency score hit 1.0 for the first time.

v8: Deterministic import paths, automatic exports, code pattern injection — the fine-tuning that eliminated the last errors.

The real insight: Take away from the LLM what's computable

The biggest leap didn't come from better prompts, but from less LLM work.

The insight sounds simple: everything that's deterministically computable shouldn't come from the LLM. Import paths can be calculated by Rust. Export keywords can be added by a regex. Code patterns for Express routes or socket handlers can be injected as templates.

Four concrete fixes in v8 made the difference:

First: ensure_exports(), a Rust regex that automatically adds export before every declaration in the types file. The LLM regularly forgets the keyword. The regex fixes that every time and costs zero tokens.
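A post-processing step of this kind might look roughly like the sketch below. To stay dependency-free it uses plain string matching from the standard library rather than the regex the original relies on, and the keyword list and the "unindented line means top-level" rule are assumptions made for illustration.

```rust
/// Keywords assumed to start a top-level TypeScript declaration.
const DECL_KEYWORDS: [&str; 6] = ["interface", "type", "enum", "class", "const", "function"];

/// Prefix every unindented declaration with `export` unless it already has one.
fn ensure_exports(source: &str) -> String {
    let mut out = source
        .lines()
        .map(|line| {
            let first_word = line.split_whitespace().next().unwrap_or("");
            // Indented lines are treated as members or bodies, not declarations.
            let is_top_level = !line.starts_with(char::is_whitespace);
            if is_top_level && DECL_KEYWORDS.contains(&first_word) {
                format!("export {line}")
            } else {
                // Lines that already start with `export` land here unchanged.
                line.to_string()
            }
        })
        .collect::<Vec<_>>()
        .join("\n");
    // `lines()` drops the final newline, so restore it if the input had one.
    if source.ends_with('\n') {
        out.push('\n');
    }
    out
}
```

Because already exported lines are left untouched, running the function twice gives the same result as running it once, which makes it safe to apply to every generated types file.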

Second: deterministic import paths. Instead of letting the LLM guess whether the path is ../../shared/types or ../../../shared/types, Rust computes the correct relative path from source and target position.
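Computing such a path needs nothing more than a comparison of directory segments. The following is a sketch under simple assumptions: both paths are project-relative, use `/` as separator, and contain no `.` or `..` segments. The function name `relative_import` is hypothetical.

```rust
/// Compute the relative TypeScript import specifier from one file to another.
fn relative_import(from_file: &str, to_file: &str) -> String {
    let mut from_dir: Vec<&str> = from_file.split('/').collect();
    from_dir.pop(); // drop the file name, keep its directory

    let target: Vec<&str> = to_file.split('/').collect();
    let target_dirs = &target[..target.len() - 1];

    // Number of leading directories both files share.
    let common = from_dir
        .iter()
        .zip(target_dirs.iter())
        .take_while(|(a, b)| a == b)
        .count();

    // One `..` for every directory we have to leave.
    let ups = from_dir.len() - common;
    let mut parts: Vec<String> = if ups == 0 {
        vec![".".to_string()]
    } else {
        vec!["..".to_string(); ups]
    };
    parts.extend(target[common..].iter().map(|s| s.to_string()));

    let path = parts.join("/");
    // TypeScript import specifiers omit the `.ts` extension.
    path.strip_suffix(".ts").unwrap_or(&path).to_string()
}
```

For a route at `src/server/routes/rooms.ts` this yields `../../shared/types`, and for a component one directory deeper it yields `../../../shared/types`, which is exactly the distinction the LLM tends to get wrong.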

Third: a structured export map. Services export instances, not classes. Routes export routers. Clear rules instead of LLM interpretation.
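One possible shape for such a map is sketched here. The `FileKind` enum, the naming scheme (`roomService`, `roomRouter`), and the helper names are all hypothetical; the source only states the rules themselves, not how they are encoded.

```rust
use std::collections::BTreeMap;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum FileKind {
    Service,
    Route,
}

/// Uppercase the first character, e.g. "room" becomes "Room".
fn capitalize(s: &str) -> String {
    let mut chars = s.chars();
    match chars.next() {
        Some(first) => first.to_uppercase().collect::<String>() + chars.as_str(),
        None => String::new(),
    }
}

/// The export line a file of the given kind is required to contain.
fn export_rule(kind: FileKind, base: &str) -> String {
    match kind {
        // Services export a ready-to-use instance, never the class itself.
        FileKind::Service => format!(
            "export const {base}Service = new {}Service();",
            capitalize(base)
        ),
        // Routes export the router object.
        FileKind::Route => format!("export const {base}Router = Router();"),
    }
}

/// Map each planned file path to its required export line.
fn export_map(files: &[(&str, FileKind, &str)]) -> BTreeMap<String, String> {
    files
        .iter()
        .map(|(path, kind, base)| (path.to_string(), export_rule(*kind, base)))
        .collect()
}
```

A map like this can be handed to every agent as context, so an agent importing from `server/services/room.ts` knows the exported symbol in advance instead of guessing it.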

Fourth: code pattern injection by file type. An Express route gets a concrete snippet with Router() and relative paths. The LLM doesn't have to invent the structure, just fill in the business logic.
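As a rough sketch, pattern injection can be as simple as choosing a snippet by file path and appending it to the prompt. The snippets, the path conventions (`/routes/`, `/sockets/`), and the prompt wording below are illustrative assumptions, not the ones used in the benchmark.

```rust
/// Skeleton for an Express route file. The import path is hardcoded here;
/// a real system would insert the deterministically computed one.
const EXPRESS_ROUTE: &str = r#"import { Router } from "express";
import type { Message } from "../../shared/types";

export const router = Router();

router.get("/", async (req, res) => {
  // business logic goes here
});
"#;

/// Skeleton for a Socket.IO handler file.
const SOCKET_HANDLER: &str = r#"import type { Server, Socket } from "socket.io";

export function registerHandlers(io: Server, socket: Socket) {
  socket.on("message", (payload) => {
    // business logic goes here
  });
}
"#;

/// Pick a code pattern based on where the file lives.
fn pattern_for(path: &str) -> Option<&'static str> {
    if path.contains("/routes/") {
        Some(EXPRESS_ROUTE)
    } else if path.contains("/sockets/") {
        Some(SOCKET_HANDLER)
    } else {
        None
    }
}

/// Build the agent prompt, appending the concrete snippet when one exists.
fn build_prompt(task: &str, path: &str) -> String {
    let mut prompt = format!("Write {path}. Task: {task}\n");
    if let Some(snippet) = pattern_for(path) {
        prompt.push_str("Follow this structure and only fill in the business logic:\n");
        prompt.push_str(snippet);
    }
    prompt
}
```

Files without a matching pattern simply get the plain prompt, so the mechanism can be introduced one file type at a time.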

The difference: if you tell the LLM "Use relative paths," it occasionally ignores that. If you give it a concrete code snippet, it implements it correctly almost every time.

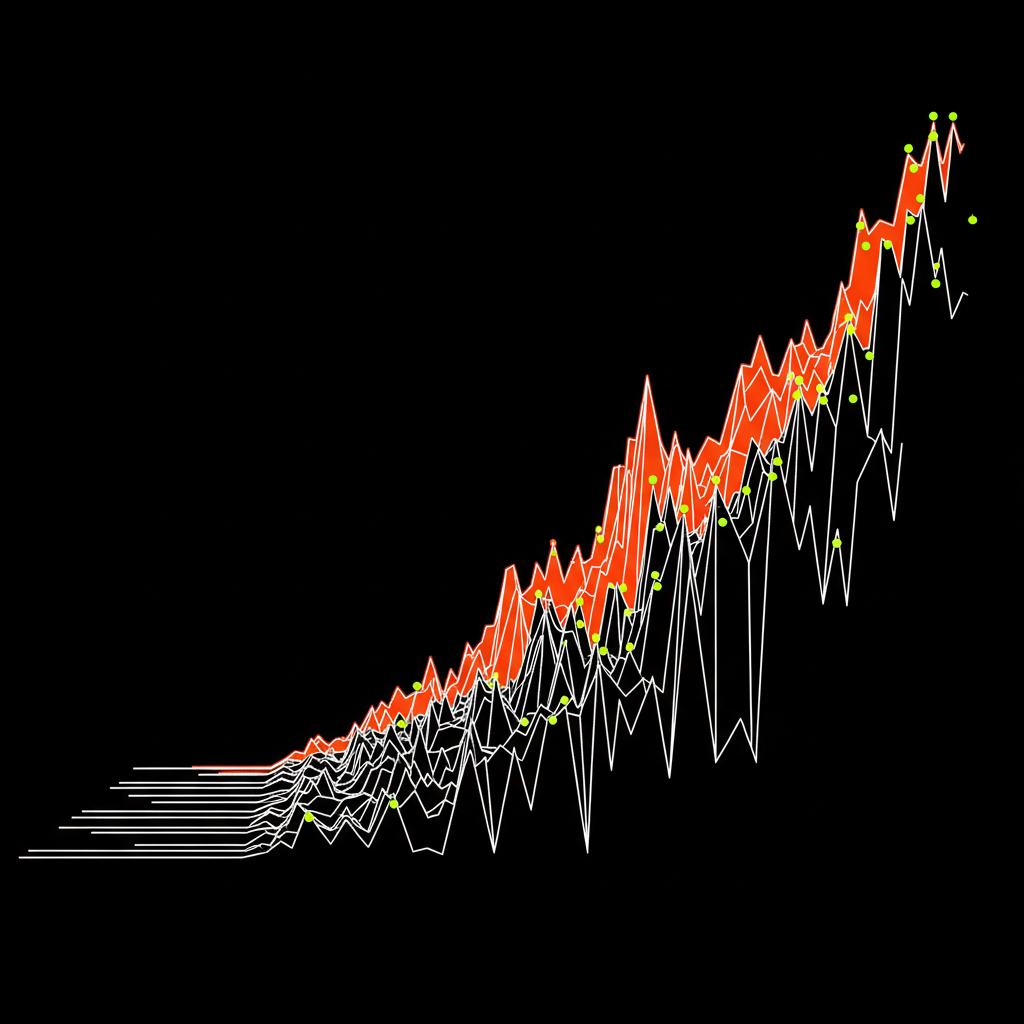

What came out of it

The chat app from the benchmark has rooms, messages, auth, Socket.IO integration, and a notification system. Not a toy app.

The numbers from v8:

- 34 files with a total of 2,217 lines of code

- 13.3 seconds total time

- Combined throughput: over 5,300 tokens per second

- Build phase alone: ~6,300 tok/s

- 16 agents in parallel on Scout-17B

- Consistency score: 1.0 — all imports match, all types fit

The parallel speedup is 4.78x — not 16x, because pass 0 and pass 2 must run sequentially and Groq has concurrency limits. The theoretical maximum would be somewhere around 6-8x.
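One way to sanity-check these figures is Amdahl's law, used here as an assumed model rather than something the measurements were derived from. Under it, a ceiling of 6-8x corresponds to a serial share of roughly 12.5-17% of the sequential runtime. The 15% used in the usage note below is an illustrative value, not a measured one, and the model ignores concurrency limits entirely.

```rust
/// Amdahl's law: speedup with `workers` parallel workers when
/// `serial_fraction` of the sequential runtime cannot be parallelized.
fn amdahl_speedup(serial_fraction: f64, workers: u32) -> f64 {
    1.0 / (serial_fraction + (1.0 - serial_fraction) / f64::from(workers))
}

/// Upper bound on the speedup as the worker count grows without limit.
fn amdahl_ceiling(serial_fraction: f64) -> f64 {
    1.0 / serial_fraction
}
```

With an assumed serial fraction of 0.15, `amdahl_speedup(0.15, 16)` comes out at about 4.9 and `amdahl_ceiling(0.15)` at about 6.7, which is in the same range as the measured 4.78x and the estimated ceiling.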

What doesn't work yet

React components are still tricky. JSX varies too much to capture in templates, and type imports are sometimes missing. Controllers occasionally call service methods with wrong arguments. And the DB layer is inconsistent: sometimes Sequelize, sometimes raw SQL, sometimes MongoDB-style, all in the same project.

These aren't unsolvable problems, but they show where 17B models hit their limits: tasks that require context and reasoning spanning more than a few files.

What's next

The next step is a cartridge system: Rust-based templates that provide the file skeleton — imports, exports, structure — and the LLM only fills in the business logic slots. That reduces the LLM portion per file to maybe 30-40% of the code.
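Since the cartridge system does not exist yet, the following is only a guess at its core mechanic: a template with named slots that get filled with LLM output. The `{{ name }}` marker syntax and the function name `fill_cartridge` are assumptions, and the marker would need a different form for JSX, where double braces occur naturally.

```rust
use std::collections::HashMap;

/// Replace every `{{ name }}` marker in `template` with the matching slot content.
/// Returns an error if a marker is unclosed or a slot has no content.
fn fill_cartridge(template: &str, slots: &HashMap<&str, &str>) -> Result<String, String> {
    let mut out = String::new();
    let mut rest = template;
    while let Some(start) = rest.find("{{") {
        // Copy the fixed skeleton text that precedes the marker.
        out.push_str(&rest[..start]);
        let after = &rest[start + 2..];
        let end = after
            .find("}}")
            .ok_or_else(|| "unclosed slot marker".to_string())?;
        let name = after[..end].trim();
        let value = slots
            .get(name)
            .ok_or_else(|| format!("no content for slot `{name}`"))?;
        out.push_str(value);
        rest = &after[end + 2..];
    }
    // Copy whatever skeleton text follows the last marker.
    out.push_str(rest);
    Ok(out)
}
```

Imports, exports, and structure live in the template and never pass through the model. A missing slot surfaces as an error instead of a silently incomplete file.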

Long-term, a meta-agent will create new templates when no existing one fits, and learn from validator feedback. The system then improves with every run. But that's still future work.